Next-generation camera can better locate tumors

A few years ago, Edoardo Charbon, an EPFL professor and head of the Advanced Quantum Architecture Laboratory, unveiled a new, ultra-high-power camera called SwissSPAD2. His device was the first able to capture and count individual photons, the smallest particles of light. It can also generate 3D images and calculate depth by measuring how long a photon takes to travel from the camera to an object.
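To give a feel for the time scales involved, here is a minimal sketch of the general time-of-flight idea, not of SwissSPAD2's actual processing. It assumes the measured time covers the round trip from the light source to the object and back, and that light travels at a constant speed set by a refractive index. The function name and the `refractive_index` parameter are hypothetical and chosen only for illustration.

```python
# Speed of light in vacuum in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def distance_from_round_trip(round_trip_seconds: float,
                             refractive_index: float = 1.0) -> float:
    """Return the one-way distance in metres for a measured round-trip time.

    Assumption: the photon travels to the object and straight back, so the
    one-way distance is half of the total path length.
    """
    if round_trip_seconds < 0:
        raise ValueError("round_trip_seconds must be non-negative")
    if refractive_index < 1.0:
        raise ValueError("refractive_index must be at least 1.0")
    # Light slows down by a factor equal to the refractive index of the medium.
    speed = SPEED_OF_LIGHT_M_PER_S / refractive_index
    return speed * round_trip_seconds / 2


# A one-nanosecond round trip in vacuum corresponds to roughly 15 cm.
print(f"{distance_from_round_trip(1e-9):.3f} m")  # prints "0.150 m"
```

In this simplified picture, resolving depth at the millimeter scale means timing photons to within a few picoseconds, which is why a sensor that can time individual photons matters.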


Since then, Charbon has tweaked his invention even further. He sent it to a colleague at Dartmouth College in New Hampshire so that they could work together on the technology. By pooling their efforts, they were able to photograph, identify and locate tumors in human tissue.

Claudio Bruschini and Edoardo Charbon © Alain Herzog 2021 EPFL

Their method involves using a laser to project red light onto an area of diseased tissue while the camera takes a picture of that area. “Red is a color that can penetrate deep into human tissue,” says Charbon. The tissue is also injected with a fluorescent contrast agent that attaches only to tumor cells.

A delay of less than one nanosecond

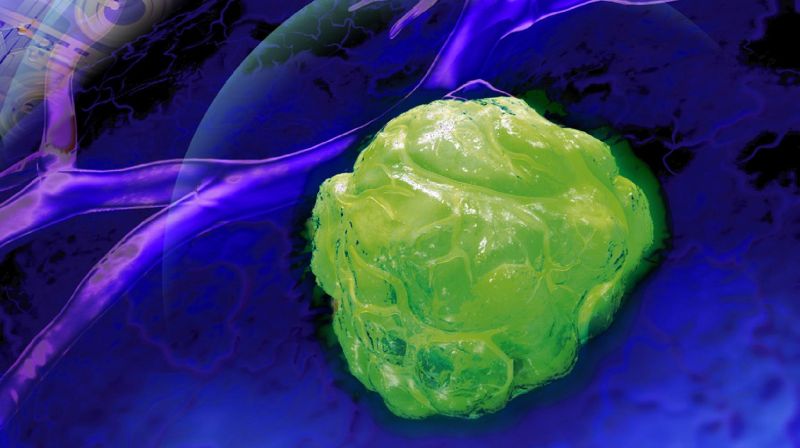

When the red light particles reach a tumor, they behave slightly differently from when they pass through healthy tissue. More specifically, they take longer to return to the point they were sent from. It is this time difference that gives scientists the information they need to reconstruct the tumor. “The delay is less than a nanosecond, but it’s enough for us to be able to generate a 2D or 3D image,” says Charbon.

Thanks to this approach, the new system can accurately identify a tumor’s shape, including its thickness, and locate it within a patient’s body. The time lag arises because red light loses some of its energy when it comes into contact with a tumor. “The deeper into a tumor the light travels, the more time it will take to return. That allows us to construct an image in three dimensions,” says Charbon.

Until now, scientists have had to choose between identifying a tumor’s depth and identifying its location. With this new technology, they can have both.

Today, surgeons can use MRI to locate a tumor, but the task gets a lot harder once they’re in the operating room. Charbon’s technology aims to help surgeons with the delicate task of removing a tumor. “The images generated by our system will let them make sure they’ve removed all the cancerous tissue and that no little pieces remain,” says Claudio Bruschini, a scientist in Charbon’s lab. The research was recently published in Optica, and the technology could also be used in medical imaging, microscopy and metrology.