Time to get social: tracking animals with deep learning

The ability to capture the behavior of animals is critical for neuroscience, ecology, and many other fields. Cameras are ideal for capturing fine-grained behavior, but developing computer vision techniques to extract the animal’s behavior is challenging even though this seems effortless for our own visual system.

One of the key aspects of quantifying animal behavior is “pose estimation”, which refers to the ability of a computer to identify the pose (position and orientation of different body parts) of an animal. In a lab setting, it’s possible to assist pose estimation by placing markers on the animal’s body, as in the motion-capture techniques used in movies (think Gollum in The Lord of the Rings). But as one can imagine, getting animals to wear specialized equipment is not the easiest task, and it is downright impossible and unethical in the wild.

For this reason, Professors Alexander Mathis and Mackenzie Mathis at EPFL have been pioneering “markerless” tracking for animals. Their software relies on deep learning to “teach” computers to perform pose estimation without the need for physical or virtual markers.

Their teams have been developing DeepLabCut, an open-source, deep-learning “animal pose estimation package” that can perform markerless motion capture of animals. Since its release in 2018, the software has gained significant traction in the life sciences, with over 350,000 downloads and nearly 1,400 citations. Then, in 2020, the Mathis teams released DeepLabCut-Live!, a real-time, low-latency version of DeepLabCut that allows researchers to rapidly give feedback to the animals they are studying.

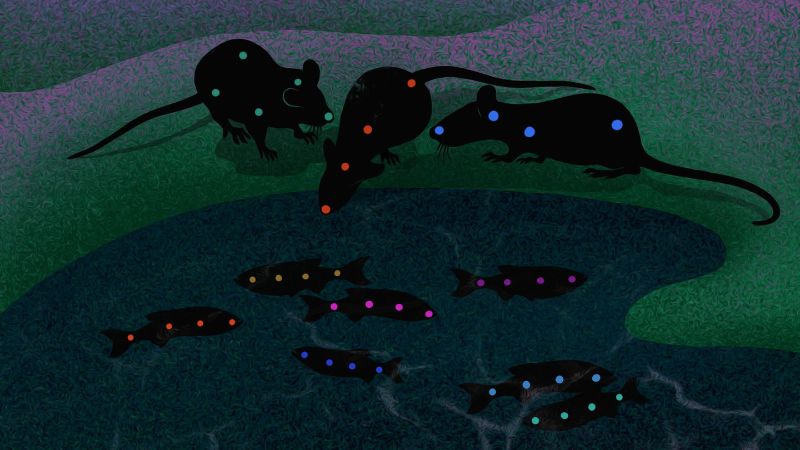

Now, the scientists have expanded DeepLabCut to address another challenge in pose estimation: tracking social animals, even closely interacting ones such as parenting mice or schooling fish. The challenges here are clear: the individual animals can look so similar that they confuse the computer, they can obscure each other, and there can be many “keypoints” that researchers wish to track, which makes efficient processing computationally difficult.

To tackle this challenge, they first created four datasets of varying difficulty for benchmarking multi-animal pose estimation networks. The datasets, collected with colleagues at MIT and Harvard University, consist of three mice in an open field, home-cage parenting in mice, pairs of marmosets housed in a large enclosure, and fourteen fish in a flow tank. With these datasets in hand, the researchers were able to develop novel methods to deal with the difficulties of real-world tracking.

DeepLabCut addresses these challenges by integrating novel network architectures, data-driven assembly (deciding which keypoint belongs to which animal), and tailored pose-tracking methods. Specifically, the researchers created a new multi-task neural network that predicts keypoints, limbs, and animal identity directly from single frames. They also developed an assembly algorithm that is “agnostic” to the body plan, which is very important when working with animals that can vary widely in their body shapes. These methods were validated on body plans ranging from fish to primates.
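
To give a feel for what “assembly” means, here is a minimal Python sketch, not DeepLabCut’s actual algorithm. It assumes a network has already scored how likely each detected “head” keypoint is to be connected to each detected “tail” keypoint; the function name `greedy_assemble`, the score values, and the `min_score` cutoff are all hypothetical. The sketch simply accepts the strongest links first, so that each keypoint ends up belonging to at most one animal.

```python
from typing import Dict, List, Tuple

# (head_index, tail_index, affinity score); the scores are made-up illustration values.
Link = Tuple[int, int, float]


def greedy_assemble(links: List[Link], min_score: float = 0.5) -> Dict[int, int]:
    """Pair each detected head keypoint with at most one tail keypoint.

    Links are visited from strongest to weakest; a link is accepted only
    if neither of its endpoints has already been assigned to an animal.
    """
    pairs: Dict[int, int] = {}
    used_tails = set()
    for head, tail, score in sorted(links, key=lambda link: link[2], reverse=True):
        if score < min_score:
            break  # every remaining link is weaker than the cutoff
        if head in pairs or tail in used_tails:
            continue  # one of the keypoints already belongs to an animal
        pairs[head] = tail
        used_tails.add(tail)
    return pairs


# Three candidate heads and two candidate tails seen in one frame.
links = [(0, 0, 0.9), (0, 1, 0.2), (1, 0, 0.3), (1, 1, 0.8), (2, 1, 0.6)]
assembled = greedy_assemble(links)
print(assembled)
```

In this toy frame, heads 0 and 1 are each matched to a tail, while head 2 is left unassigned because its only strong candidate is already taken. Nothing in the sketch assumes a particular skeleton, which is the sense in which an assembly step can be agnostic to the body plan.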

Additionally, the scientists developed a method for identifying individual animals from video without any “ground truth” identity data. “Imagine the difficulty of reliably labeling which lab mouse is which,” says Mackenzie Mathis. “They look so similar to the human eye that this task is nearly impossible.”

The new algorithm is based on metric learning with vision transformers, and even allows scientists to re-identify animals and continue tracking them when multiple animals hide from view and reappear later. The researchers also used an appearance-based approach to analyze the behavior of pairs of marmosets across nine hours of video, almost a million frames. One of the insights from this approach was that marmosets, a highly social species, like to look in similar directions together.
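
The idea behind re-identification with metric learning can be illustrated with a short Python sketch. It is an illustration under stated assumptions, not the researchers’ implementation: it assumes a trained model has already turned each animal’s appearance into an embedding vector, and the three-number embeddings, the names `mouse_A` and `mouse_B`, the function `reidentify`, and the 0.8 similarity threshold are all hypothetical. An animal that reappears is given the identity whose stored embedding is most similar to its own.

```python
import math
from typing import Dict, List, Optional


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def reidentify(query: List[float],
               gallery: Dict[str, List[float]],
               threshold: float = 0.8) -> Optional[str]:
    """Return the known identity most similar to the query embedding,
    or None if no stored embedding reaches the similarity threshold."""
    best_id, best_sim = None, threshold
    for animal_id, embedding in gallery.items():
        sim = cosine_similarity(query, embedding)
        if sim >= best_sim:
            best_id, best_sim = animal_id, sim
    return best_id


# Hypothetical embeddings stored while both animals were clearly visible.
gallery = {"mouse_A": [1.0, 0.0, 0.1], "mouse_B": [0.0, 1.0, 0.1]}

match = reidentify([0.9, 0.1, 0.1], gallery)     # resembles mouse_A
unknown = reidentify([0.5, 0.5, -0.7], gallery)  # resembles neither animal
print(match, unknown)
```

Metric learning is what makes such a comparison meaningful: the model is trained so that images of the same individual land close together in embedding space and images of different individuals land far apart.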

“Hundreds of laboratories around the world are using DeepLabCut, and have used it to analyze everything from facial expressions in mice, to reaching in primates,” says Alexander Mathis. “I’m really looking forward to seeing what the community will do with the expanded toolbox that allows the analysis of social interactions!”